The Context

For the second Lixo Journal Club article series, we’ll talk about Deep Bayesian Active Learning with Image Data by Gal and al., presented at the International Conference on Machine Learning in 2017*.*

At Lixo and for many applications involving machine learning and neural networks, one straightforward way to improve the performance of your model is to add more annotated data to the training dataset. However, annotating new data is costly, takes time, and requires specific expertise. To give a an example related to the waste sector, it can be especially hard to differentiate between PET and PEHD plastic, one is slightly shinier than the other but it’s quite subtle and hard to tell the difference between the two materials on an image. Active Learning for image applications aims to find the images that have the most value from a pool of unlabelled images. These images are more likely to improve model performance at a better rate if we annotate them.

Why did we choose this paper?

It’s a paper closely linked to many industry use cases: improving an existing model while limiting annotation costs and time. While Active Learning has been a subject of research for years, this paper lays the foundation for active learning for deep neural networks working with image data.

How is it innovative?

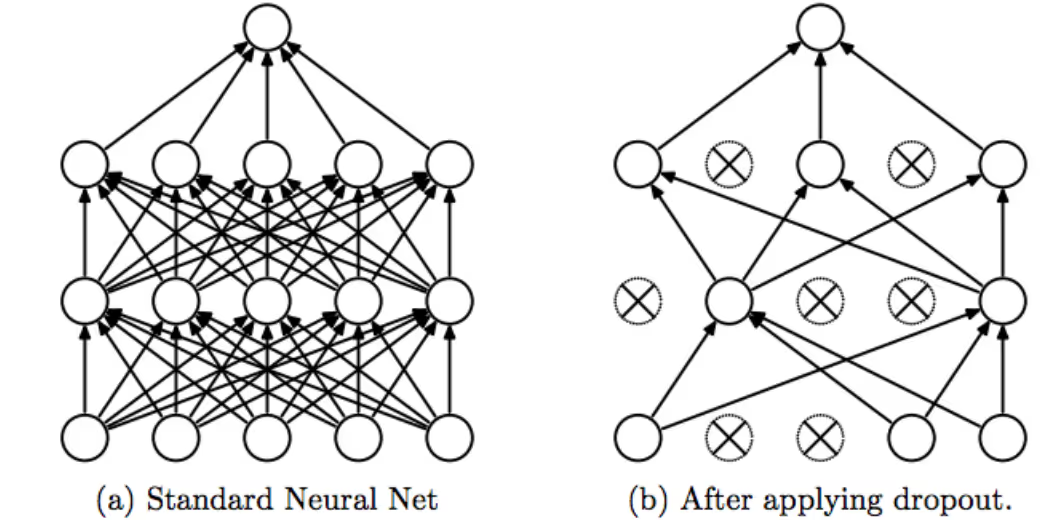

The authors propose a new method to quantify the uncertainty of a neural network using Drop Out, a technique usually used for regularization. Drop Out as a regularization technique consists of randomly shutting down some neurons during training to avoid overfitting. However, all the neurons are activated during inference. For more details, refer to Srivastava et al.

The idea of Gal et al. is to leverage Drop Out to generate several instances of the same network. During inference, they randomly shut down some neurons in each network instance using different seeds. They end up with predictions from multiple networks, each network having different activtions but are sampled from the same distribution. Once they instantiated these different networks, the idea is to compare their outputs. If, for a given image, the same network with different dropout seeds disagree on the prediction of an input (eg: one network predicts a cat, the other a dog, and another a car), then we should consider the original network uncertain about the image. Therefore, the model could have more to gain from adding the aforementioned input to its training set than an image the model is already certain about. This is called Bayesian Active Learning by Disagreement (BALD). The authors also propose other data acquisition methods using Drop Out to get instances from the same distribution of networks.

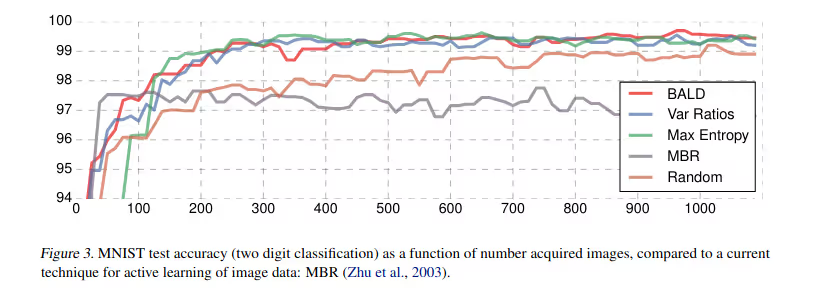

This paper focuses its benchmark on image data, using the MNIST dataset and ISIC 2016 Melanoma. It’s interesting to have a paper focus on Active Learning applied to images because these schemes can be dependent both on then used architecture and the data distribution.

The benchmark starts with training a network on a few random images, then they use BALD (or some other) method to determine the following ten images to choose from the image pool. Then they retrain a model and iterate. Their method shows better performance with fewer images compared to a random choice of images.

What are its limits?

Simple classification task

The authors focus their benchmarks on simple classification tasks and use very simple datasets. It would have been interesting to see more complex data and more complex tasks such as object detection, image segmentation, and pose estimation. Also the authors use a simple VGG16 architecture with some dropout layers at the end. It would have been interesting to see if their results would stay the same using other architectures such as ResNet.

Latency

This method requires running inference multiple times to estimate the uncertainty of a model. If it needs to implement Drop Out with five different seeds, it multiplies the latency required for one image by 5. At Lixo we cannot use this technique directly on our embedded devices since they are working in real-time.

Not Batch Aware

One other potential issue with this framework is that it ranks images one by one without taking into account the diversity across the pool of unlabelled images. It can be a problem when two images that are almost duplicates are both considered misleading for the model and, thus are both selected for the next training batch. Some new approaches are now taking this limitation into account to avoid selecting similar images like in Kirsch et al..

Batch size

The paper uses an active learning batch size of 10 images. At Lixo we are using active learning to know what are the most relevant images are to annotate. While we understand that a small batch size means a more optimal approach, it is very difficult and costly to manage several trainings with image annotation in between. In addition, the cost of training a model increases a lot with a small batch size, especially with more complex architectures than VGG16.

Conclusion

At Lixo, we want to continuously improve our model while limiting the cost/time used for annotation. Active Learning is an excellent framework to answer this problem, and the Gal and al. paper brings some great ideas on how to implement active learning using neural networks on image data. While the paper focuses on one simple classification task, at Lixo we confirmed that we can use the ideas of the paper on more specific and complicated tasks (such as object detection for the waste sector) and limit the number of annotated data needed to improve the model.

More generally, in an industrial setting, the key to improving your model’s performance is usually data-centric (focusing on the data instead of the model). Another complementary approach to leverage a huge quantity of unlabelled data is semi-supervised learning, but we’ll leave that for another blog post ;)

To learn more:

- Deep Bayesian Active Learning with Image Data, Yarin Gal, Riashat Islam, Zoubin Ghahramani (2017)

- Dropout: A Simple Way to Prevent Neural Networks from Overfitting, Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, Ruslan Salakhutdinov (2014)

- BatchBALD: Efficient and Diverse Batch Acquisition for Deep Bayesian Active Learning, Andreas Kirsch, Joost van Amersfoort, Yarin Gal (2019).